In Part I and Part II of this thread, I discussed the advantages of incorporating contract checks into one’s unit testing methodology. I thought it would be useful to include an example, so here it is . . .

To decide on the example, I first thought of the contract check I wanted to write – to check that a sorted list is actually sorted – and then worked my way backwards to figure out the routine that I wanted to write. Sorting routines are already available (qsort, anyone?), so it seemed a little silly to write one of those. Instead, I postulated someone writing a routine that would calculate the permutation needed to sort an array.

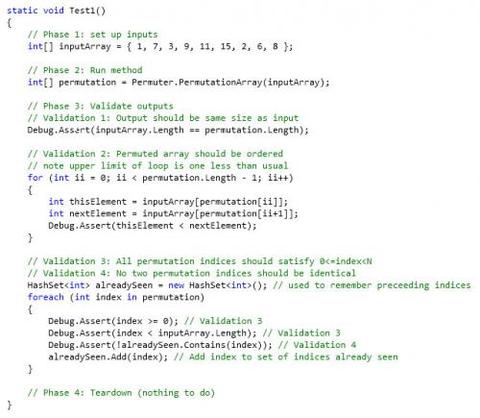

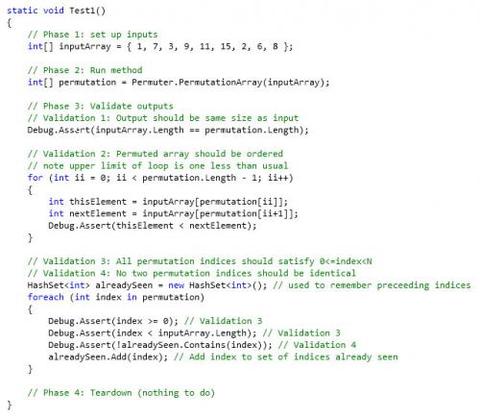

Since TDD is all the rage, here’s the test (I’m using C#):

Note the validation conditions after the routine has been called: that the permuted values actually are sorted (#2), and that the purported permutation array actually is a permutation (#1, #3, and #4). Each of these conditions is suitable as a contract check, since they must always be true, independent of data inputs.
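The listing itself is not reproduced in this text, so here is a minimal sketch of what such a test might look like. The names are assumptions for illustration: `SortingPermutation` stands in for the routine under test, and `Check` stands in for the test framework's assert. The mapping of #1, #3, and #4 to particular permutation checks is also a guess; only #2 (the sortedness check, with its strict comparison) follows the description in this post.

```csharp
using System;
using System.Linq;

public static class Test1Sketch
{
    // Hypothetical routine under test: returns perm such that
    // values[perm[0]], values[perm[1]], ... is in ascending order.
    public static int[] SortingPermutation(int[] values)
    {
        return Enumerable.Range(0, values.Length)
                         .OrderBy(i => values[i])
                         .ToArray();
    }

    // Stand-in for a test framework's assert (assumption).
    static void Check(bool condition, string what)
    {
        if (!condition) throw new Exception("Validation failed: " + what);
    }

    public static void Test1()
    {
        // Phase 1: set up the data.
        int[] values = { 30, 10, 20 };

        // Phase 2: run the routine.
        int[] perm = SortingPermutation(values);

        // Phase 3: validate the result.
        Check(perm.Length == values.Length, "#1 same length as input");
        var seen = new bool[values.Length];
        for (int i = 0; i < perm.Length; i++)
        {
            Check(perm[i] >= 0 && perm[i] < values.Length, "#3 index in range");
            Check(!seen[perm[i]], "#4 no index used twice");
            seen[perm[i]] = true;
            // Strict comparison, as in the post's validation #2.
            if (i > 0)
                Check(values[perm[i - 1]] < values[perm[i]], "#2 permuted values sorted");
        }
    }
}
```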

The test-code design complexity issue that I’ve been talking about would come in if we wanted to write a second test, perhaps using a different data set. In that case, we would need one of the following: a "design-and-validate" routine that performs phases 2 and 3 (running and validating) together, which is almost identical to introducing contract checks; a separate routine for phase 3 that each test calls at its end; or some similar architectural cleverness in the test class(es).
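As a rough sketch of the second option (validation pulled out into its own routine), something like the following would do. All names here are hypothetical, and the sortedness check keeps the strict comparison used in this post's validation #2. Note that every test has to remember to call the helper.

```csharp
using System;
using System.Linq;

public static class SharedValidationSketch
{
    // Hypothetical routine under test.
    public static int[] SortingPermutation(int[] values) =>
        Enumerable.Range(0, values.Length).OrderBy(i => values[i]).ToArray();

    // Phase 3 pulled out into one routine shared by all tests.
    public static void ValidateSortingPermutation(int[] values, int[] perm)
    {
        if (perm.Length != values.Length)
            throw new Exception("wrong length");

        // A permutation of 0..n-1, once sorted, is exactly 0, 1, ..., n-1.
        if (!perm.OrderBy(i => i).SequenceEqual(Enumerable.Range(0, values.Length)))
            throw new Exception("not a permutation");

        // Strict comparison, as in the post's validation #2.
        for (int i = 1; i < perm.Length; i++)
            if (!(values[perm[i - 1]] < values[perm[i]]))
                throw new Exception("permuted values not sorted");
    }

    public static void TestA()
    {
        int[] values = { 30, 10, 20 };
        ValidateSortingPermutation(values, SortingPermutation(values));
    }

    public static void TestB()
    {
        int[] values = { 5, 4, 3, 2, 1 };
        ValidateSortingPermutation(values, SortingPermutation(values));
    }
}
```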

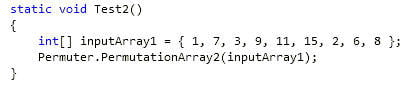

In contrast, if we turn the validation conditions into contract checks (by moving them into contract blocks in the routine), then the test now looks like this:
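Since the listing is not reproduced in this text, here is a minimal sketch of the idea. It assumes a hypothetical `SortingPermutation` routine and uses plain exceptions thrown from a hypothetical `Ensure` helper in place of whatever contract mechanism the real code uses. The sortedness check keeps the strict comparison used in this post.

```csharp
using System;
using System.Linq;

public static class ContractSketch
{
    // Hypothetical routine with its validation moved inside as postconditions.
    public static int[] SortingPermutation(int[] values)
    {
        int[] perm = Enumerable.Range(0, values.Length)
                               .OrderBy(i => values[i])
                               .ToArray();

        // Contract block: these checks run on every call, from any caller.
        Ensure(perm.Length == values.Length, "same length as input");
        var seen = new bool[values.Length];
        for (int i = 0; i < perm.Length; i++)
        {
            Ensure(perm[i] >= 0 && perm[i] < values.Length, "index in range");
            Ensure(!seen[perm[i]], "no index used twice");
            seen[perm[i]] = true;
            if (i > 0)
                Ensure(values[perm[i - 1]] < values[perm[i]], "permuted values sorted");
        }
        return perm;
    }

    // Stand-in for a contract facility (assumption).
    static void Ensure(bool condition, string what)
    {
        if (!condition)
            throw new InvalidOperationException("Contract violated: " + what);
    }

    // The test only sets up data and drives the routine.
    public static void Test2()
    {
        int[] values = { 30, 10, 20 };
        SortingPermutation(values);
    }
}
```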

A lot simpler, eh? The significance is that we just removed a responsibility from the test code – the test code now is only responsible for driving an execution path within the routine; it no longer has responsibility for validating the results. Let me emphasize what I just said, because I didn’t realize it or its significance before I typed it up for this post. In order to solve a once-and-only-once problem, we just removed a responsibility from the test. This would indicate that the true root cause of the complexity is a violation of the Single Responsibility Principle (SRP) by the xUnit test classes – they’re responsible for setup, running, validating and tear-down. Each of the four phases is an independent axis of change; SRP says there should be only one (or was that the Highlander?) :)

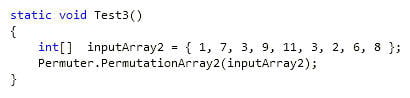

OK, now it’s time for a question: how many of you noticed the bug in my contract check? As a hint, imagine what would happen if the routine were called with this input data instead:

This is the reason that I wanted to use something related to sorting in my example; it’s very easy to forget the possibility of two identical elements in the list that you’re sorting. (The bug, of course, is that validation 2 should have used "<=", rather than "<".) This is also the heart of why Test2 is a better way of doing things than Test1 – the contract checks in Test2 have a chance of picking up the oversight as a side-effect in another test. And if the oversight is detected, it is immediately localized to the permutation routine.
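To make the oversight concrete, here is a small sketch with hypothetical names. The input `{ 2, 1, 2 }` is an illustrative choice, not necessarily the data shown above. It evaluates the sortedness condition both ways on a correctly computed permutation.

```csharp
using System;
using System.Linq;

public static class DuplicateSketch
{
    // Hypothetical routine: indices of values in ascending order of value.
    public static int[] SortingPermutation(int[] values) =>
        Enumerable.Range(0, values.Length).OrderBy(i => values[i]).ToArray();

    // Sortedness check; allowEqual selects "<=" instead of "<".
    public static bool IsSorted(int[] values, int[] perm, bool allowEqual)
    {
        for (int i = 1; i < perm.Length; i++)
        {
            int prev = values[perm[i - 1]];
            int cur = values[perm[i]];
            bool ok = allowEqual ? prev <= cur : prev < cur;
            if (!ok) return false;
        }
        return true;
    }

    public static void Demo()
    {
        int[] values = { 2, 1, 2 };                  // two identical elements
        int[] perm = SortingPermutation(values);

        // The strict check rejects a correct result; the "<=" check accepts it.
        Console.WriteLine(IsSorted(values, perm, allowEqual: false)); // False
        Console.WriteLine(IsSorted(values, perm, allowEqual: true));  // True
    }
}
```

The routine's output is correct in both cases; only the strict version of the check is wrong, which is exactly the kind of mistake a contract check inside the routine can surface when some other test happens to pass in duplicates.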

This is what I meant in my previous post about "Configuration Coverage" and "(No Need) for Cleverness" – including the validation code in the method gives us a much better chance (than external validation) of picking up and localizing the fact that we missed a subtlety.

Note that such a bug in the contract is important even if the routine works correctly; the fact that the contract is wrong means that the developer didn’t understand everything about the possible input configurations. There are several possible ways the routine could manage duplicate entries; the point is that the choice of behavior should be a conscious decision. Understanding these choices can lead to completely different choices of algorithm and (more importantly) client code behavior and assumptions.

Finding contract bugs is also important in a software-as-an-ecosystem analysis. Recall that the vast majority of defects in a major application like ACIS are not due to "happy path" failures of methods. Instead, they come from low-probability, hard-to-think-of data configurations (such as repeated list elements in the permutation example) generated in the environment of the application being run millions of times. One way to counteract this environmental dependency and increase robustness is to use provably correct algorithms. The problem is that these proofs rely on correctly understanding exactly those corner cases that are so hard to think of; missing a case means that the correctness proof could be wrong and the algorithms susceptible to environmental changes.