In my last post, 3D Graphics and Internet Browsers, I gave an overview of the different technologies we have experimented with to provide the graphical viewport inside the web browser. Missing from that conversation was any consideration of performance, especially when it comes to the initial loading and viewing of industrial-sized parts.

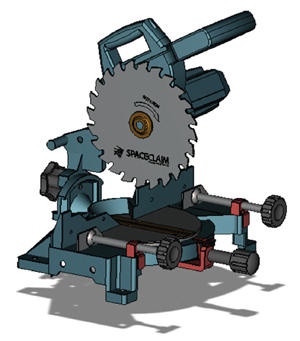

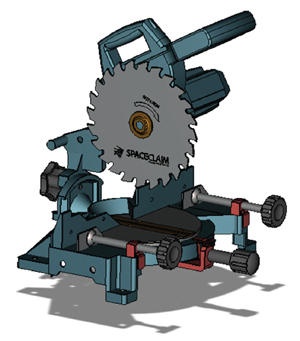

You can go to the site now and test performance. I’m sure experiences will vary, as everybody’s internet connection is different. Our tests (from working at home, coffee shops, etc.) show that the SpaceClaim Saw, shown in Figure 1 on the left, can be loaded off the server, visualized and ready to model in anywhere from 5–10 seconds. (Sometimes longer if you have a really slow connection.) Now, this part is small by today’s standards (45,000 facets), so I consider the performance we’re experiencing somewhat pedestrian. We know the industry is going to demand better. Our most recent work has gone in this direction, and this is what we have found.

The visualization data being sent to the browser is in the form of XML. I’ve mentioned we are using an XML schema called X3D. The key point here, however, is not really X3D; it’s XML, and XML is represented as plaintext. Our first attempt at optimization (beyond the basics: preprocessing the visualization data and storing it alongside the b-rep, representing visualization data with four significant digits as opposed to eight, and optimizing the tessellation routines) was simply to byte-compress the XML before sending it to the client. XML, given its inherent structure and being plaintext, compresses nicely. To do this we had two choices: use IIS’s built-in compression capabilities, or gzip the data ourselves. Two weeks ago Spatial Labs was relying on IIS’s compression mechanism. Last week we reconfigured the site to gzip the data and then use the browser’s plug-in to decompress. The visualization data for the SpaceClaim Saw was 4.5 MB before compression and 860 KB after. That’s good, but upon testing we learned that manually gzipping the file didn’t buy us any significant improvement over the built-in compression in IIS. (It sped up a little; however, it’s hard to measure internet performance, since getting consistent conditions over the net is difficult.)
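To give a rough feel for why plaintext numeric XML compresses well, here is a minimal sketch in Python (chosen only for illustration; it is not what runs on the site). The `make_coordinate_xml` helper is hypothetical and fills an X3D-style `Coordinate` element with random vertices at four significant digits. Random numbers compress worse than real tessellation data, so the ratio it prints should not be compared with the saw’s figures above.

```python
import gzip
import random


def make_coordinate_xml(num_points: int, seed: int = 0) -> bytes:
    """Build a stand-in X3D-style Coordinate element (hypothetical test data)."""
    rng = random.Random(seed)
    # Four significant digits per number, mirroring the precision mentioned above.
    numbers = [f"{rng.uniform(-100.0, 100.0):.4g}" for _ in range(num_points * 3)]
    points = " ".join(numbers)
    return f'<Coordinate point="{points}"/>'.encode("utf-8")


raw = make_coordinate_xml(20_000)
compressed = gzip.compress(raw)

# Round-trip check: gzip is lossless, so the XML comes back byte for byte.
assert gzip.decompress(compressed) == raw

ratio = len(compressed) / len(raw)
print(f"plaintext: {len(raw)} bytes, gzipped: {len(compressed)} bytes ({ratio:.0%})")
```

Whether the server (IIS) or your own code does the gzipping, the bytes on the wire are produced by the same kind of algorithm, which is consistent with the two approaches performing about the same.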

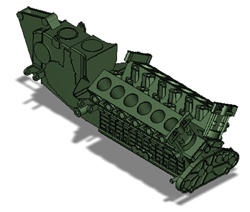

Digging into the problem a little further, we reached a conclusion that is obvious in hindsight: it’s not just the time and size of the transmission. The plug-in was spending a great deal of time parsing the XML and converting the plaintext numerical data to actual doubles and integers. And looking at our visualization data, a healthy 90% of it is really numbers. The performance hit becomes more apparent on the bigger models, such as the two engine blocks we have on Spatial Labs. The small engine block (98,000 facets), shown in Figure 2 on the right, can take up to 10–15 seconds, with most of that time spent converting the plaintext data. (The compressed XML is 1.5 MB, which should take only a couple of seconds to download.)
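The difference between the two decoding paths can be sketched as follows. This is an illustration only, assuming a flat list of coordinates; `parse_text` and `parse_binary` are hypothetical names, and timings in Python will not mirror what a native plug-in sees. The point is simply that the text path has to tokenize and convert every number, while the binary path reinterprets bytes that are already in machine form.

```python
import struct
import time

# Hypothetical payload: 200,000 coordinates, first as plaintext, then as float32.
values = [i * 0.001 for i in range(200_000)]
text_payload = " ".join(f"{v:.4g}" for v in values)
binary_payload = struct.pack(f"<{len(values)}f", *values)


def parse_text(payload: str) -> list[float]:
    """Split on whitespace and convert each token, as an XML reader must."""
    return [float(token) for token in payload.split()]


def parse_binary(payload: bytes) -> list[float]:
    """Reinterpret little-endian 32-bit floats directly from the byte stream."""
    count = len(payload) // 4
    return list(struct.unpack(f"<{count}f", payload))


start = time.perf_counter()
from_text = parse_text(text_payload)
text_seconds = time.perf_counter() - start

start = time.perf_counter()
from_binary = parse_binary(binary_payload)
binary_seconds = time.perf_counter() - start

print(f"text:   {len(text_payload)} bytes, parsed in {text_seconds:.4f} s")
print(f"binary: {len(binary_payload)} bytes, parsed in {binary_seconds:.4f} s")
```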

So this takes us down the path of using a binary format for our visualization data, as opposed to any XML-based plaintext format. Luckily our chosen XML format, X3D, supports a binary form, X3DB. However, this all ties back to the plug-in you choose for your browser. Unfortunately, not all X3D (VRML) browsers will accept and work with binary XML forms. If I had to rewrite my last post (discussing the various available plug-ins), I would include the ability to work with binary data as a necessary condition. But it doesn’t end here; there is one more piece to the puzzle. We’ve concluded that binary is good and compression of the binary is better, but to get the best performance possible you need to work with different forms of geometric compression as well. We’re not at a point where we can talk details yet; however, this is a rich area, with work dating back many years (Java3D). Hopefully, in a future post, we’ll address these technical challenges clearly.
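As a generic illustration of what geometric compression can mean (this is not our implementation, which we aren’t ready to describe), one common building block is quantization: store each coordinate as a 16-bit integer position inside the model’s bounding box rather than as a full float. The sketch below is in Python for readability; the `quantize` and `dequantize` names and the byte layout are assumptions made up for this example.

```python
import struct

Point = tuple[float, float, float]
LEVELS = 65535   # largest value an unsigned 16-bit code can hold
HEADER = "<6d"   # assumed layout: 3 bounding-box minimums, then 3 step sizes


def quantize(points: list[Point]) -> bytes:
    """Pack points as 16-bit grid positions inside their bounding box (lossy)."""
    mins = [min(p[a] for p in points) for a in range(3)]
    maxs = [max(p[a] for p in points) for a in range(3)]
    # Step size per axis; use 1.0 for a flat axis to avoid dividing by zero.
    steps = [((maxs[a] - mins[a]) / LEVELS) or 1.0 for a in range(3)]
    codes = [
        min(LEVELS, round((p[a] - mins[a]) / steps[a]))
        for p in points
        for a in range(3)
    ]
    return struct.pack(HEADER, *mins, *steps) + struct.pack(f"<{len(codes)}H", *codes)


def dequantize(blob: bytes) -> list[Point]:
    """Recover approximate points; the error is at most half a step per axis."""
    header = struct.unpack_from(HEADER, blob, 0)
    mins, steps = header[:3], header[3:]
    offset = struct.calcsize(HEADER)
    count = (len(blob) - offset) // 2
    codes = struct.unpack_from(f"<{count}H", blob, offset)
    return [
        (
            mins[0] + codes[i] * steps[0],
            mins[1] + codes[i + 1] * steps[1],
            mins[2] + codes[i + 2] * steps[2],
        )
        for i in range(0, count, 3)
    ]


points = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (0.5, 0.25, 1.5)]
blob = quantize(points)
restored = dequantize(blob)
print(f"{len(blob)} bytes for {len(points)} points")
```

Each vertex costs 6 bytes here instead of 12 for three 32-bit floats, at the price of a small, bounded loss of precision. Schemes used in practice typically go further, for example by delta-encoding neighboring vertices, and the result can still be byte-compressed afterward.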

To conclude, and going back to my last post one more time: we speculated that the future was going the way of HTML5 and WebGL, completely zero-deployment and very much JavaScript-based. Now this is where things get interesting. We can use the browser to decompress, and we learned that it works well. However, we will have to use JavaScript to parse the binary stream and feed it to WebGL calls. In addition, we have to write any geometric decompression logic in JavaScript as well. That starts to add up to a pretty tall order for what you need to code in JavaScript. All of this leads to some acknowledgment that browser plug-ins aren’t THAT bad.

Your thoughts?