In previous posts I mentioned the multiprocessing capabilities in CGM and how these differ from multi-threading in ACIS. I will now try to shed some light on this fundamentally different approach to providing an infrastructure with which application developers can leverage multi-core hardware.

CGM is neither thread-safe nor reentrant. This implies that one cannot utilize multiple threads to improve performance. The CGM infrastructure instead supports an efficient use of multiple processes to achieve this goal. This approach, probably the most utilized form of multiprocessing in the industry, has both strengths and weaknesses.

The ACIS and CGM geometric modeling engines are made up of millions of lines of source code. Making ACIS thread-safe was a significant undertaking requiring changes to many, if not most, source files. These changes, needed to support multi-threading, mainly addressed the serial nature of our algorithms. We simply weren’t thinking of parallelism during the design and initial development stages of the modeler (probably the case for CGM as well).

The CGM team took a different approach. Instead of changing code, they chose to leverage the inherent ability of modern operating systems to efficiently manage multiple processes and processors, by concurrently executing multiple instances of the modeler, each independent of the others.

Working with multiple processes requires inter-process communication to distribute tasks and gather results. In CGM, this is accomplished with custom scheduling and communication implementations that use socket-level send and receive functions. This functionality is similar to that defined in the de facto standard Message Passing Interface (MPI), an inter-process management system that provides not only communication but also process management functionality.

Communicating between processes can impose a significant overhead, especially when modeling data is being sent back and forth. The CGM team developed their own system mainly because they had intentions to optimize communications wherever possible. The result is an implementation that avoids duplicate transmissions of fundamental CGM objects. A model and its supporting structures, having been sent once to a process, will not be sent again in subsequent communications.
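To make the idea of avoiding duplicate transmissions concrete, here is a minimal sketch of one way it could be done: the sender remembers which objects each process already holds and only transmits the rest. This is not the CGM implementation. `SendCache`, `FilterUnsent`, `ObjectId` and `ProcessId` are all hypothetical names invented for illustration.

```cpp
#include <cassert>
#include <set>
#include <utility>
#include <vector>

// Hypothetical sketch: none of these names come from CGM.
using ObjectId = int;
using ProcessId = int;

class SendCache {
public:
    // Returns only the objects that `process` has not received yet
    // and records them as sent.
    std::vector<ObjectId> FilterUnsent(ProcessId process,
                                       const std::vector<ObjectId>& wanted) {
        std::vector<ObjectId> toSend;
        for (ObjectId id : wanted) {
            // insert().second is true only if the pair was not already stored.
            if (sent_.insert(std::make_pair(process, id)).second) {
                toSend.push_back(id);
            }
        }
        return toSend;
    }

private:
    // Every (process, object) pair that has been transmitted so far.
    std::set<std::pair<ProcessId, ObjectId>> sent_;
};
```

With a cache like this, a second task that references an already transmitted model would only carry the identifier of that model, not its data.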

The CGM multiprocessing infrastructure and all its subtleties are best conveyed with a simple example. The multi-body slice demonstration shown below, which I prepared for the recent European Forum, is good because it’s conceptually simple and yet highly applicable.

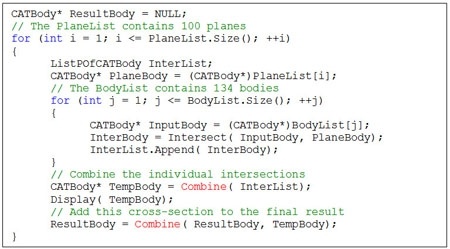

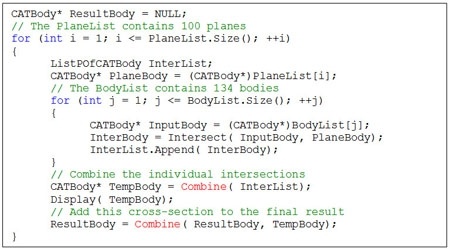

In this example we compute 100 cross sections through an airplane model containing 134 bodies, combining the individual intersections of each body for each planar slice into a wire body. Each wire body is displayed as it is computed, and when all slices are complete they are combined into a single result body.

In the original sequential algorithm we had an outer loop to iterate over the slices and an inner loop to iterate over the bodies. In each pass, we created a slicing plane, computed the intersections for each body, and combined and displayed the results. This is the quickest way to compute the cross-sections. Below is a simplification of the original algorithm.

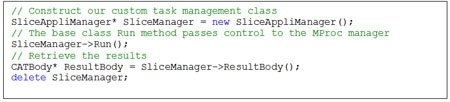
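For readers who prefer text to a screenshot, the nested loops of the sequential workflow can be sketched roughly as follows. The types are toy stand-ins, not CGM types: a "body" is just a z-extent and a "wire" just counts the bodies a plane cuts. `Intersect` and `Combine` are placeholders, and `SliceSequential` and its parameters are assumptions made for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy stand-ins, not CGM types.
struct Body  { double zMin, zMax; };
struct Plane { double z; };
struct Wire  { int pieces = 0; };

// Hypothetical placeholder for a body/plane intersection.
Wire Intersect(const Body& body, const Plane& plane) {
    Wire w;
    w.pieces = (plane.z >= body.zMin && plane.z <= body.zMax) ? 1 : 0;
    return w;
}

// Hypothetical placeholder for merging two wire bodies.
Wire Combine(const Wire& a, const Wire& b) {
    Wire w;
    w.pieces = a.pieces + b.pieces;
    return w;
}

// Outer loop over slices, inner loop over bodies, as described in the text.
std::vector<Wire> SliceSequential(const std::vector<Body>& bodies,
                                  int sliceCount, double zStart, double zStep) {
    std::vector<Wire> slices;
    for (int i = 0; i < sliceCount; ++i) {
        Plane plane{zStart + i * zStep};  // create the slicing plane
        Wire slice;
        for (std::size_t j = 0; j < bodies.size(); ++j) {
            slice = Combine(slice, Intersect(bodies[j], plane));
        }
        slices.push_back(slice);  // the real demo displays each slice here
    }
    return slices;
}
```

Every (plane, body) pair is independent of the others, which is what makes this workflow a natural candidate for distribution across processes.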

To utilize the CGM multiprocessing infrastructure (called MProc) we had to change things a bit. We first derived a custom task management class from the AppliManager base class, which is responsible for task creation and processing. Then we derived a custom operator class from the AppliOperator base class, which embodies the operation to be performed. With this in place we were able to instantiate our custom task manager, run it, and retrieve our results. These changes replaced the original code of the sequential workflow mentioned above. Below is a simplification of the task management operation.

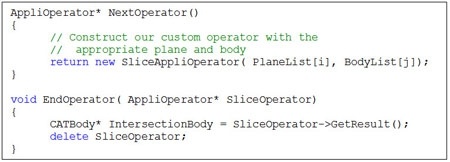

The client task management class has two main virtual methods to implement: NextOperator and EndOperator. The former is called by the MProc system to create a task, the latter to retrieve the results of the completed operation. In our example, the NextOperator method constructs our custom operator containing the appropriate body and plane to be intersected. The EndOperator method retrieves the results, the intersection, before deleting the operator. Below is a simplification of the task management class methods.

The AppliManager base class Run method passes control to the MProc manager to schedule the tasks on available processes, or on the main process if none are available. It also passes completed operators to EndOperator for processing and clean-up. This continues until all tasks are completed. The custom task management class also contains data members and logic to select the appropriate plane and body for the current operation, and to manage the results. This is similar to the original algorithm, in that we use variables (i and j) to track the current plane and body in the loop iterations.
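The interplay between the manager and the operator can be mimicked with a pair of mock base classes. The class and method names (`AppliManager`, `AppliOperator`, `NextOperator`, `EndOperator`, `Run`) follow the text, but every signature below is an assumption, and the mock `Run` only covers the serial fallback. `SliceOperator`, `SliceManager` and the integer "result" are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Mock base classes: names from the text, signatures assumed.
class AppliOperator {
public:
    virtual ~AppliOperator() = default;
    virtual void Run() = 0;  // performs the operation
};

class AppliManager {
public:
    virtual ~AppliManager() = default;
    virtual AppliOperator* NextOperator() = 0;        // nullptr when no tasks remain
    virtual void EndOperator(AppliOperator* op) = 0;  // collect results, delete op

    // Serial fallback only: every task runs on the calling process.
    void Run() {
        while (AppliOperator* op = NextOperator()) {
            op->Run();
            EndOperator(op);
        }
    }
};

// Toy operator: stands in for "intersect body j with plane i".
class SliceOperator : public AppliOperator {
public:
    SliceOperator(int plane, int body) : plane_(plane), body_(body) {}
    void Run() override { result_ = plane_ * 1000 + body_; }
    int Result() const { return result_; }

private:
    int plane_, body_;
    int result_ = -1;
};

class SliceManager : public AppliManager {
public:
    SliceManager(int planes, int bodies) : planes_(planes), bodies_(bodies) {}

    AppliOperator* NextOperator() override {
        if (i_ >= planes_ || bodies_ <= 0) return nullptr;
        AppliOperator* op = new SliceOperator(i_, j_);
        // Advance (i, j) the way the nested loops would.
        if (++j_ == bodies_) { j_ = 0; ++i_; }
        return op;
    }

    void EndOperator(AppliOperator* op) override {
        results_.push_back(static_cast<SliceOperator*>(op)->Result());
        delete op;
    }

    const std::vector<int>& Results() const { return results_; }

private:
    int planes_, bodies_;
    int i_ = 0, j_ = 0;  // current plane and body
    std::vector<int> results_;
};
```

Constructing `SliceManager manager(100, 134)` and calling `manager.Run()` would walk through all 13,400 plane/body pairs in the same order as the nested loops. In a multi-process run, results would presumably arrive in whatever order the tasks complete, so a real `EndOperator` would need to record which plane each result belongs to.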

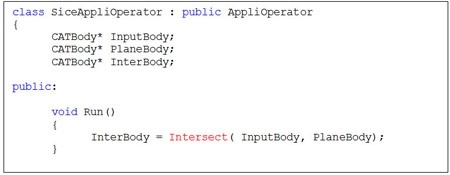

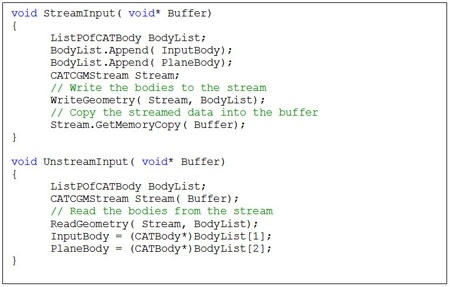

The custom operator is a bit more complex because it not only contains data members for the inputs and outputs, but also the code for the operation as well as code to “stream data”. Below is a simplification of part of the custom operator class.

The pieces described so far are all that is technically needed to complete the entire operation serially, that is, without utilizing additional processes, as would be the case on single-processor machines. In this case, the MProc manager simply calls the custom operator class Run method to compute the results, which provides consistent behavior without requiring additional code to handle the serial workflow.

When multiple processes are utilized, we need the data, both inputs and outputs, to be formatted in a way that lends itself to inter-process communication. This simply means organizing the data into a contiguous chunk of memory, so that each transmission consists of a single piece of data. We call this streaming. The streaming of bodies (geometry and topology) is a core functionality of CGM.

Four additional methods are required in the custom operator class to handle all streaming needs. Two are exercised on the master process: StreamInputs and UnstreamOutputs. These stream the body and plane into a send buffer and unstream the result body from a received buffer, respectively. The other two are exercised on the slave process: UnstreamInputs and StreamOutputs. These similarly unstream the body and plane from the received buffer and stream the result body to the send buffer. These methods are called by the MProc infrastructure as needed to facilitate inter-process communication using sends and receives. Below is a simplification of two of the streaming methods.
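The round trip through the four streaming methods can be illustrated with a toy operator that packs its data into a contiguous byte buffer. The method names come from the text, while their signatures, the `Buffer` type, the `Put` and `Get` helpers and `RoundTrip` are assumptions made for this sketch. Real CGM bodies are streamed by CGM itself; here the "body" is just a z-extent, and both "processes" live in one program.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

using Buffer = std::vector<unsigned char>;  // one contiguous chunk of bytes

// Append the raw bytes of a trivially copyable value to the buffer.
template <typename T>
void Put(Buffer& buf, const T& value) {
    const unsigned char* p = reinterpret_cast<const unsigned char*>(&value);
    buf.insert(buf.end(), p, p + sizeof(T));
}

// Read a value back and advance the read offset.
template <typename T>
T Get(const Buffer& buf, std::size_t& offset) {
    assert(offset + sizeof(T) <= buf.size());
    T value;
    std::memcpy(&value, buf.data() + offset, sizeof(T));
    offset += sizeof(T);
    return value;
}

// Toy operator: inputs are a z-extent and a plane height, the output says
// whether the plane cuts the extent.
class SliceOperator {
public:
    SliceOperator() = default;
    SliceOperator(double zMin, double zMax, double planeZ)
        : zMin_(zMin), zMax_(zMax), planeZ_(planeZ) {}

    void Run() { hit_ = (planeZ_ >= zMin_ && planeZ_ <= zMax_) ? 1 : 0; }
    int Hit() const { return hit_; }

    // Master side: pack the inputs into the send buffer.
    void StreamInputs(Buffer& out) const {
        Put(out, zMin_); Put(out, zMax_); Put(out, planeZ_);
    }
    // Slave side: unpack the inputs in the same order they were packed.
    void UnstreamInputs(const Buffer& in) {
        std::size_t off = 0;
        zMin_ = Get<double>(in, off);
        zMax_ = Get<double>(in, off);
        planeZ_ = Get<double>(in, off);
    }
    // Slave side: pack the result into the send buffer.
    void StreamOutputs(Buffer& out) const { Put(out, hit_); }
    // Master side: unpack the result from the received buffer.
    void UnstreamOutputs(const Buffer& in) {
        std::size_t off = 0;
        hit_ = Get<int>(in, off);
    }

private:
    double zMin_ = 0, zMax_ = 0, planeZ_ = 0;
    int hit_ = -1;
};

// Simulates master -> slave -> master; buffer copies stand in for sockets.
int RoundTrip(double zMin, double zMax, double planeZ) {
    SliceOperator master(zMin, zMax, planeZ);
    Buffer toSlave;
    master.StreamInputs(toSlave);

    SliceOperator slave;
    slave.UnstreamInputs(toSlave);
    slave.Run();
    Buffer toMaster;
    slave.StreamOutputs(toMaster);

    master.UnstreamOutputs(toMaster);
    return master.Hit();
}
```

Copying raw bytes like this is only safe between processes built for the same architecture, which is a simplification made to keep the sketch short.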

Overall, utilizing the MProc infrastructure is relatively straightforward. However, one must be aware of the overhead of inter-process communication before putting it to use. Workflows with complex operations will usually scale well because the cost of the operation outweighs the overhead of communication. As an example, adding multiprocessing to the face-face intersection phase of the CGM Boolean algorithm proved beneficial. Here, surface pairs are distributed to slave processes, where the intersections are computed and sent back to the master process. The multi-body slice example discussed here also benefited: the overall computation time was reduced from 36 to 9 seconds on an 8-core system.
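As a rough back-of-the-envelope reading of the slice timings, and assuming all eight cores were actually hosting a process (the measurement details are not given here), the speedup $S$ and parallel efficiency $E$ work out to:

$$
S = \frac{T_{\text{serial}}}{T_{\text{parallel}}} = \frac{36\ \text{s}}{9\ \text{s}} = 4,
\qquad
E = \frac{S}{p} = \frac{4}{8} = 0.5
$$

Under that assumption, about half of the ideal eight-fold gain is realized. The text does not break down where the remainder goes, though communication overhead is the cost it singles out.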

The new MProc infrastructure will be available in a future release of the CGM Component. We like to say “the proof is in the pudding”, and hope our customers have opportunities to try it out and find unique ways to benefit from this new functionality.

Note: I’ve taken liberties to simplify the example code shown above. The functions Combine and Intersect, for example, do not exist as such in CGM. This functionality is available through public operators.
